Unveiling the Epic Saga: The Astonishing Evolution of Artificial Intelligence

Introduction

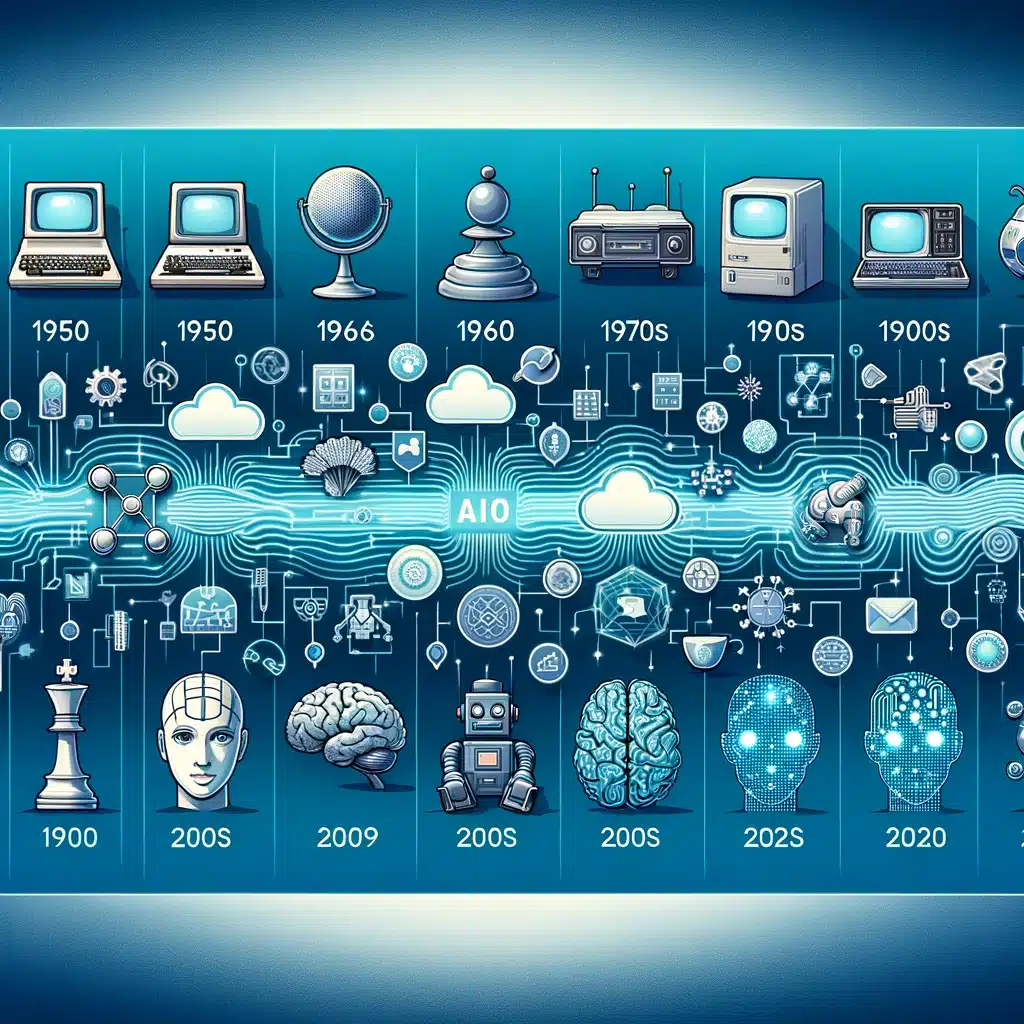

Artificial Intelligence (AI) has transformed from a fascinating concept in science fiction into a pivotal force in real-world technology. Today, AI touches many aspects of our lives, from the way we communicate to how we work and play. But how did we get here? This blog series delves into the rich history of AI, exploring its roots and tracing its evolution over the decades. Join us on a journey through time as we uncover how AI has reshaped our world and continues to push the boundaries of what machines can do.

The Genesis: 1950s

The story of AI began in the 1950s, an era brimming with curiosity and the drive to explore new frontiers of science and technology. During this decade, AI moved out of theoretical research and established itself as a distinct field. Its foundations had been laid in the 1940s with cybernetics, a discipline that studies control and communication in machines and living things and that suggested machines could be built to mimic aspects of living systems.

One landmark event was the publication in 1950 of Alan Turing’s paper “Computing Machinery and Intelligence,” which asked whether machines can think. The paper proposed what is now known as the Turing Test and sparked debates and research that would shape the future of AI. The 1950s also saw the construction of SNARC, one of the first neural network machines, by Marvin Minsky and Dean Edmonds in 1951, which further established AI as a serious academic endeavor.

AI in the 1960s: From Theory to Practice

Moving from theoretical concepts to tangible experiments, the 1960s marked a period of significant advancement in AI. Researchers began to develop algorithms and computer programs that could not only perform complex calculations but also mimic some forms of human decision-making. The decade saw stronger game-playing programs and early advances in natural language processing: computer programs could play checkers or chess and, as with ELIZA (1966), hold basic scripted conversations in English.

This period also saw symbolic reasoning flourish, building on programs like the Logic Theorist, developed in the mid-1950s by Allen Newell, Herbert A. Simon, and Cliff Shaw, which could prove mathematical theorems and showed that machines could perform tasks previously thought to be exclusive to human cognition. The groundwork laid during this time was crucial, setting the stage for AI’s future integration into everyday technology.

The 1970s: AI Winter and Introspection

The 1970s began with high hopes following the advances of the 1960s, but the decade soon ran into AI’s practical limitations. The period from the mid-1970s onward is often referred to as the first “AI Winter,” characterized by a significant reduction in funding and interest in AI research after overhyped expectations went unmet. The limited computational power of the time was a major barrier, hindering the development of more advanced AI applications.

Despite these setbacks, the decade was not devoid of progress. Researchers delved deeper into logic programming and commonsense reasoning, both crucial for AI’s ability to understand and interact with the real world. It was during this time that the field also began to grapple with the complexities of machine learning, paving the way for future breakthroughs. This era of introspection helped clarify the path forward, focusing on achievable goals and setting more realistic expectations for AI’s capabilities.

The 1980s: AI Boom and the Rise of Expert Systems

Following the winter, the 1980s experienced a resurgence in AI interest and funding, often referred to as the “AI Boom.” This decade was marked by the rise of expert systems: AI programs designed to emulate the decision-making abilities of a human expert. These systems were adopted across industries for tasks such as diagnosing diseases, predicting weather patterns, and managing financial investments.

Expert systems like MYCIN and XCON were among the first to show that AI could have real-world applications, recommending treatments for bacterial infections and configuring computer system orders, respectively. This period also witnessed a renewed interest in neural networks, spurred by algorithms such as backpropagation that made training multi-layer networks practical. Global investment in AI research soared, with governments and companies around the world recognizing the potential of AI technologies.

The 1990s: Integration into Technology and Society

The 1990s saw AI become more deeply integrated into both the technology sector and everyday life. This decade brought about the development of intelligent agents and an increased focus on solving specific, isolated problems with AI, which led to substantial advancements in areas such as machine learning, natural language processing, and robotics.

AI’s role during this time was pivotal yet often unrecognized; its algorithms improved logistics, manufacturing, and customer service, laying the groundwork for the modern AI applications we see today. Moreover, the 1990s introduced the world to more sophisticated forms of AI in games, most visibly when IBM’s Deep Blue defeated world chess champion Garry Kasparov in 1997, and in early personal assistants. These developments captivated the public’s imagination and highlighted AI’s potential to become a ubiquitous part of daily life.

Entering the 21st Century: AI Revolution

The early 2000s marked a significant shift in AI applications, as technology became more advanced and integrated into consumer products. This period saw the introduction of groundbreaking devices like the Roomba vacuum cleaner in 2002, showcasing AI’s potential to handle everyday tasks independently. Additionally, the Mars rovers Spirit and Opportunity, which landed in 2004, demonstrated AI’s capabilities in navigating and analyzing Martian terrain, representing a leap in autonomous technology.

During this time, the concept of “Big Data” emerged as a pivotal factor in AI development. The explosion in data collection and processing enabled by advances in computing power and storage technology allowed for more sophisticated machine learning techniques. AI began to excel in areas like image and speech recognition, significantly outperforming previous methods and sometimes even rivaling human capabilities.

The 2020s: AI Becomes Mainstream

In the current decade, AI is no longer just a tool for automating tasks or an experimental technology confined to labs. It has become a fundamental component of many industries, driving innovations in healthcare, finance, education, and entertainment. The release of advanced AI models like OpenAI’s ChatGPT in late 2022 has further demonstrated AI’s ability to process and generate human-like text, holding conversations and answering questions at a level earlier systems could not reach.

The development of AI has also sparked discussions about ethics, privacy, and the future of employment, as machines begin to perform tasks traditionally done by humans. The integration of AI in daily life raises important questions about the balance between technological advancement and its societal impact.

AI’s Resilience and Future Direction

Reflecting on AI’s history, it’s clear that the field has experienced cycles of hype and disillusionment, yet it has consistently advanced and found new applications. The resilience of AI research and development has not only transformed industries but has also changed how we view machine intelligence and its potential.

As we look to the future, the boundaries between human and machine intelligence may continue to blur, with AI becoming more integrated into our cognitive processes and daily decision-making. The journey of AI, from theoretical constructs in the mid-20th century to an integral part of contemporary life, is a testament to human ingenuity and the relentless pursuit of knowledge.

Conclusion

From its humble beginnings in the 1950s to its pervasive presence today, AI has traveled a remarkable path. The history of artificial intelligence is not just a chronicle of technological advancement but a narrative of human ambition and visionary foresight. As we stand on the brink of potential future breakthroughs, the story of AI remains an unfolding chapter in the broader saga of human progress.

https://www.tableau.com/data-insights/ai/history